Protecting your practice: a guide to the privacy of AI notetakers

As mental health professionals, we navigate a delicate balance every day. We create safe spaces where clients can share their deepest vulnerabilities, while safeguarding their trust through confidentiality. It's a sacred covenant that forms the foundation of therapeutic healing.

Yet today, many of us find ourselves at a crossroads. The promise of AI-powered tools to reduce administrative burden and return our focus to client care is undeniably attractive. These tools offer a path away from documentation fatigue and back to the heart of why we became therapists in the first place: to be fully present with our clients.

But this promise comes with legitimate concerns. When we invite technology into our practice, are we inadvertently compromising the very confidentiality we've sworn to uphold? Are we trading convenience for privacy?

Many therapists feel a profound unease at this possibility, and, unfortunately, many of those concerns are well-founded. Many leading AI platforms for therapists have written their terms of service and privacy policies in ways that give them carte blanche to use your practice’s data as they wish, potentially including selling it to data brokers. Even Sam Altman, CEO of OpenAI, the company behind ChatGPT, has warned that conversations with AI do not, by default, carry legal privilege.

The good news is that you don't have to choose between efficiency and ethics. By understanding the landscape of data privacy in therapy technology, you can make informed choices that honor both your need for support and your commitment to client confidentiality.

This guide, fact-checked by a data privacy expert, walks you through the critical privacy considerations for evaluating any AI-powered clinical tool. We hope it helps you ask the right questions before entrusting your practice, and your clients' most personal moments, to a technology partner.

How to tell if a tool is private

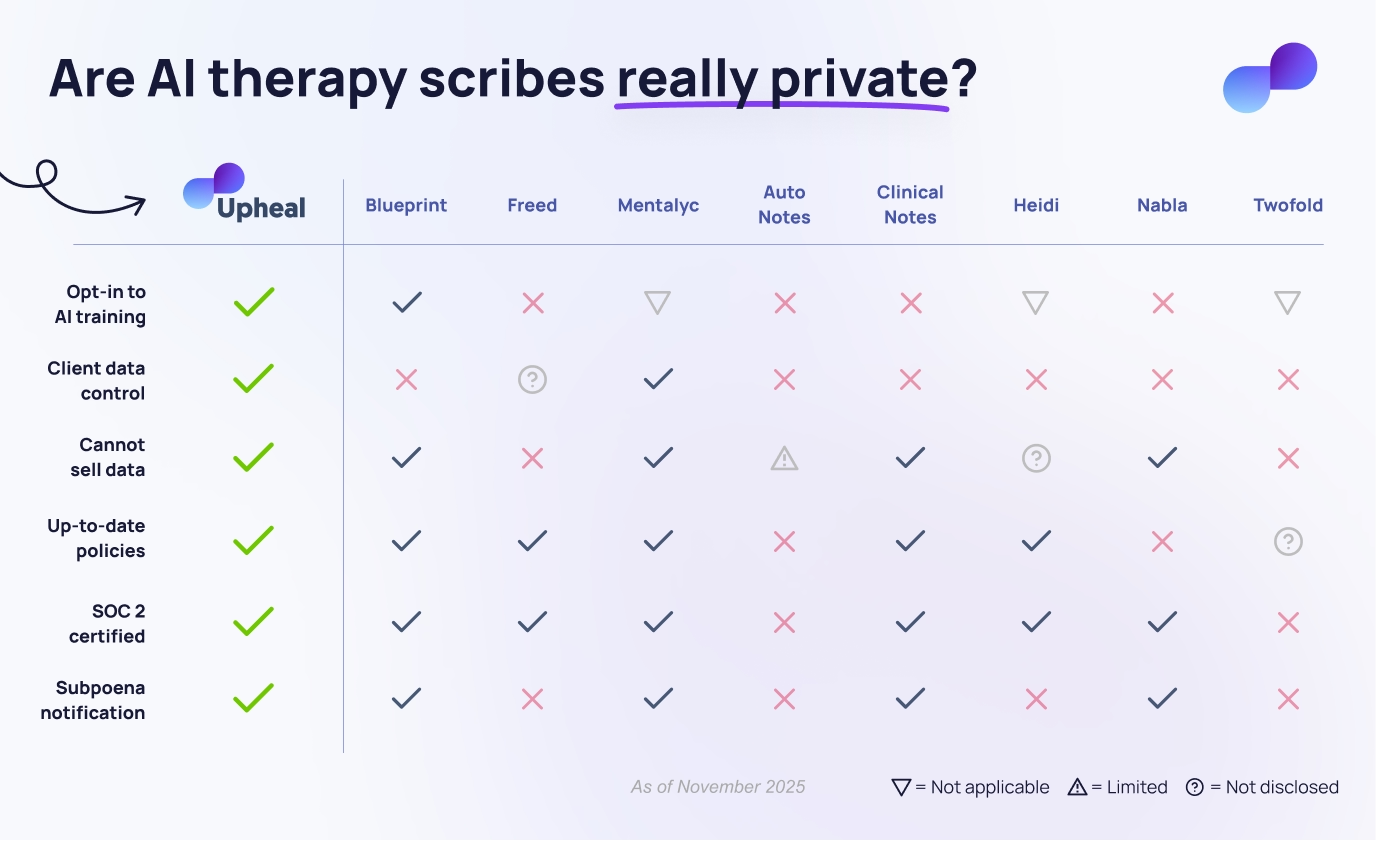

No two privacy policies are the same. We brought together experts in AI, clinical ethics, and privacy law to figure out what to look for in an AI-powered medical scribe. That led us to identify six key criteria against which a privacy policy can be judged:

- Users have to opt in before their data is used to train the AI tool.

- Users have the power to opt out of de-identified data being used to improve the product.

- The terms do not allow the company to sell data collected.

- Privacy policies are actively maintained and frequently (at least annually) updated.

- The company's security practices are independently audited under SOC 2 (Type II).

- Where legally possible, therapists remain in control of responding to a subpoena or government request for information.

Read on for a more detailed description of each criterion, as well as a current round-up of leading tools.

1. Explicit consent for model training

When you document a session using an AI notetaker, that deeply personal narrative doesn't simply disappear after your note is generated. Many companies reserve the right to use that data (your client's most vulnerable moments) to train their AI models. The question of who controls how these stories are used touches the very heart of our ethical practice.

The key distinction isn't whether AI learning happens (after all, continuous improvement benefits everyone) but whether clients and therapists are transparently informed and maintain meaningful choice and control over their participation in that learning process.

What to look for

If you are concerned with how session recordings might be used, you may want to consider tools that allow you to generate notes without recording and storing sessions at all.

Otherwise, review the privacy policy for explicit language about data usage for AI training. Look for terms like "model training," "service improvement," or "algorithm development." The policy should clearly state:

- Whether client session data is used for AI training

- Whether participation is consent-based (opt-in), or automatic (opt-out)

- Whether and how the data is de-identified and protected

- What specific information is retained

- How long the data is kept

The gold standard is explicit opt-in consent — where nothing is used for training unless you actively choose to participate — rather than opt-out models where your data is used by default unless you take action.

Comparing AI tools

Freed and ClinicalNotes state they use de-identified client data to train models.

Mentalyc, Twofold, and Heidi state they do not use client data to train models.

Other platforms, such as Nabla and AutoNotes, have terms in their privacy policies that would implicitly allow them to use data for training without clearly communicating this to users.

Since we first published this guide, Blueprint has publicly stated that it does not use your data to train its models. It has not disclosed how its models are trained.

Upheal requires explicit opt-in consent before any recorded session data is used for training purposes. This consent-first approach means your and your clients' session data is never used to train AI models unless you have both made an active, informed choice to participate. Additionally, all data is thoroughly de-identified before being processed for training, according to rigorous standards detailed in Upheal's privacy policy. Upheal also provides a consent management framework to help providers collect client consent to use Upheal and to opt in to model training.

2. Allowing clients to opt out

Client autonomy doesn't end when technology enters the therapy room. Just as clients can request that certain information be kept out of their formal records, they should have a say in how their data is used by technology providers. The power to opt out of having their data used to improve a product isn’t just a nice feature; it’s an extension of therapeutic ethics in digital form.

What to look for

Seek language that explicitly outlines opt-out procedures. The policy should address:

- How to initiate an opt-out request

- What specific data usages can be opted out of

- Whether opting out affects service functionality

- Confirmation processes for opt-out requests

Be wary of policies that make opting out difficult or impossible, or that bury opt-out information in dense legal language.

Comparing AI tools

The following tools do not disclose if they allow clients to opt out of their data being used to improve the platform, but do follow local laws allowing users to opt out of marketing communications:

Freed does not disclose how clients can control the way their data is used. It does have a procedure for deleting client data, but the deletion has to be carried out by the therapist.

Upheal does not require clients to opt in to the use of session data for model training; by default, their data is not used. If clients do want to share their data, Upheal collects explicit opt-in consent through providers before de-identifying and using it.

3. Selling session data

Perhaps the most troubling practice in the therapy tech space is the selling of data. When companies monetize session information — even in aggregated form — they transform sacred therapeutic exchanges into commercial assets. In some cases, de-identified data is brokered en masse, to the tune of millions of dollars, to train experimental chatbots that would replace therapists.

What to look for

Examine the policy for language about data sharing with third parties, particularly for commercial purposes. Red flags include:

- Vague terms about "business partners" or "affiliates"

- Permission to share data for "business purposes" without clear definition

- Language about data as a company asset that can be transferred during acquisition

- Absence of explicit promises never to sell data

The strongest policies will contain a clear, unequivocal statement that client data will never be sold or shared for commercial purposes.

Comparing common tools

Freed and Twofold both have terms that explicitly grant them the right to transfer aggregate data for the company's own benefit.

AutoNotes also reserves that right, but limits usage to research purposes.

Heidi is silent on whether they sell user data.

Upheal, Mentalyc, ClinicalNotes, and Blueprint all explicitly state that they do not sell personal information.

4. Up-to-date privacy policies

The digital privacy landscape evolves constantly, with new regulations, threats, and best practices emerging regularly. A company's commitment to keeping its privacy policy current reflects its overall dedication to protecting clients. Outdated policies may indicate neglect of privacy concerns or a failure to adapt to emerging standards.

What to look for

When considering a tool, check how current their privacy policy is by looking for:

- Last update date (should be within the last year)

- References to current regulations (HIPAA, GDPR, CCPA, etc.)

- Clear version history or change documentation

- Proactive notification systems for policy updates

Companies with regularly updated policies demonstrate ongoing commitment to privacy protection.

Comparing common tools

The following tools have recently updated their privacy policies:

- Blueprint (May 2025)

- Upheal (November 2025)

- Heidi (October 2024)

- Freed (September 2024)

- ClinicalNotes (September 2024)

Nabla has not updated its privacy policy since May 2023.

Neither AutoNotes nor Twofold shares the date of its most recent update, which makes it harder to track changes transparently. Public archives of AutoNotes’ website suggest it is still using the same policy from April 2023.

5. SOC 2 Certification

Many companies claim HIPAA or GDPR compliance, but these claims often rely on self-assessment rather than external verification. Third-party audits such as SOC 2 (Type II), by contrast, require an independent auditor to test a company's security controls over a period of time, providing objective evidence that its data protections work in practice.

What to look for

Search for:

- Explicit mention of a SOC 2 (Type II) report (not just "compliance")

- Information about independent auditors

- The date of the most recent report and evidence that the audit is renewed annually

- Willingness to share the SOC 2 (Type II) report upon request

Companies that are truly committed to their security practices will display this information prominently rather than hiding behind vague compliance claims.

Comparing common tools

Most of the tools we reviewed report having completed a SOC 2 (Type II) audit:

- Upheal

- ClinicalNotes

- Heidi

- Freed

- Blueprint

- Nabla

- Mentalyc

Twofold and AutoNotes do not appear to have any such certifications.

6. Subpoena notification

As therapists, we understand the limited circumstances under which we might be legally compelled to break confidentiality. When client information resides on third-party servers, those parties might also be compelled to share your clients’ data. But in crucial cases like these, sensitive information should be shared at the discretion of the therapist — not a tech company.

Legally, there are narrow cases where a company can be forced to share data without notifying the therapist. But in most cases, the company can (and should!) notify the therapist so that the therapist can be involved in responding to the subpoena.

What to look for

Examine policies for:

- Transparent disclosure about subpoena response processes

- Commitment to notifying the account holder (the therapist) of a subpoena before releasing data

- Data minimization and storage practices that limit what can be subpoenaed

- Zero-knowledge or end-to-end encryption that technically limits access

Companies with strong privacy values will be honest about legal limitations while implementing technical and policy safeguards to maximize protection.

Comparing common tools

Freed shares data in response to a subpoena at its own discretion, “when [they] believe in good faith that disclosure is necessary.” It does not state whether it notifies the account holder.

Heidi, AutoNotes, and Twofold state they may disclose data to comply with legal requirements, but do not say whether or how they would notify the clinician.

The following platforms all commit to notifying providers when served with a subpoena (except where they are legally required to not notify):

Upheal takes this a step further with product features that let clinicians keep notes separate from transcripts, helping ensure that only the minimum required information is disclosed to government agencies.

Choose a partner, not just a product

In a field built on trust and confidentiality, the technology tools we choose reflect our deepest professional values. When evaluating AI-powered documentation tools, we're not just selecting software—we're choosing partners who will either honor or compromise our ethical commitments.

At Upheal, we build our platform with the understanding that we're guardians of therapy's most sacred element: the confidential therapeutic relationship. Our approach to privacy isn't an afterthought or marketing tactic—it's the foundation of everything we create.

We invite you to apply the standards in this guide to any technology you consider bringing into your practice, including ours. Ask the difficult questions. Read the privacy policies. Demand transparency. Your clients trust you with their most vulnerable moments, and you deserve technology partners who honor that trust as seriously as you do.

Because in the end, the most advanced AI in the world isn't worth compromising the fundamental promise we make to every client: what you share here is protected.
