I’m a therapist – should I try this AI thing?

As a coach, therapist-in-training, and writer at Upheal, I felt the need to comment on the use of AI in the world of mental health. I’ve noticed that my peers are very divided. Like Marmite, AI has both its haters and its lovers. But I don’t think we need to sit at either extreme.

We’re looking at a new frontier, something like when banking first moved online, and people are always a little afraid of something new. But in a digital world with ever-growing technical power, each new capability brings possibilities, benefits, and risks at the same time.

The question is: is it worth it? I believe that it is, though there’s a lot we need to consider, safeguard, and prepare for. That said, the potential is incredible. Will you join us?

How can AI impact the world of mental health?

In theory, AI could offer quicker and cheaper access than traditional mental health services, which suffer from staff shortages, long wait lists, and high costs. Some have already tried to use AI as a first step in therapy, or even as a replacement for it.

Now, we at Upheal don't believe AI should replace therapists. Nor do we believe that AI is the answer to a broken social system. People deserve access to health care, mental or physical, and AI cannot be held responsible for healing people with serious mental health conditions, nor be seen as on par with a human psychotherapist who has thousands of emotions, years of study, and life experience. (Robert Plutchik believed that humans can experience over 34,000 unique emotions, though he identified eight primary emotions from which the others arise.)

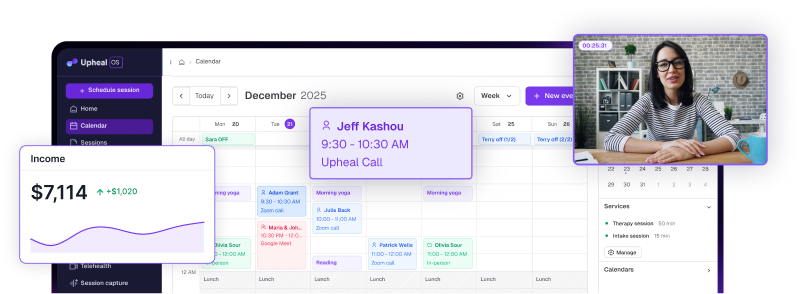

That said, we believe AI has great potential to empower healing professionals in their working lives, observations, and therapeutic practices by automating administrative tasks and taking over large chunks of practice management. As technology’s reach enters a new phase, some mistakes may happen – not necessarily out of a desire to sell data (though there will always be interested parties), but simply because no one has done this before.

If we’re sold on the benefits and the vision of how AI could help enhance this field, we can take precautions together to create better circumstances for the good of all – both for those receiving therapy and those giving it.

Upheal’s security, privacy, and trust

We know data and privacy are extremely important. Any personal data is pseudonymized, and if access is needed, it’s carefully controlled, logged, and limited to the roles that require it (e.g., for troubleshooting), and data can only be viewed once we’ve received approval.

We’ve also undergone external audits for HIPAA compliance, GDPR compliance, and SOC 2 certification. We have not and will not use personal data for targeted advertising or sell data to third parties. Our privacy policy clearly outlines the purposes for which we collect data, and we are legally bound by that policy and by the Business Associate Agreement (BAA) we sign with you. So we couldn’t misuse your data even if we tried.

But we agree that it is a matter of trust – and some companies such as Cerebral and BetterHelp haven’t exactly created an atmosphere of transparency and safety. We don’t want anyone’s data. We want to support mental health professionals and make progress in the applications of AI to this field.

Many of us on the Upheal team have had our own mental health journeys, were drawn to working at a company that focuses on things that truly matter and add to our human experience in some way, or both. Feel free to read Juraj’s story or take a look at my own. Which is a nice segue into sharing that we have coaches and mental health professionals like Jan and Katka on the team (roles I fill myself, too).

My personal take

As someone who’s both been in therapy and provides coaching services, I have far too much respect for the process to ever consider AI to be able to replace a therapist. We are dealing with people’s traumas, years of conditioning, individual brain chemistry, unique upbringings, and more. Every single nuance matters and it’s not only about language.

As a big admirer of Peter Levine and somatic experiencing, I know that so much in therapy is experienced on a physical level too. Gradually learning to articulate our experience in language is also part of trauma work, but we have to work through both the physical and the verbal within the safe container of our relationship with our therapist. And that means we may need to be held, to be paced with, to be shown how to stand or sit, to fall to the floor of our therapy space, and weep. I don't know about you, but I can't imagine AI doing that with me just yet.

That said, trauma work is one of the areas where I see tremendous potential for Upheal. Once Upheal can capture video (and not just audio), we'll be able to help therapists record and analyze aspects of their clients' body language. From micro-expressions and tics to signs of trapped trauma, or simply body language at odds with what a client is saying, there will be many ways video content could help therapeutic practice.

Imagine showing your client a 15-second clip of a previous session, and saying, "See that? When you said you felt happy, but you grimaced and grabbed the chair?"

Psychoeducation and training are two excellent and much-needed use cases I can think of, though applications across various psychological modalities may reveal even more potential. Analyzing body movement and language will open a whole new area for us. And I think that's a good thing, because we must also learn to reconnect with our bodies, safely and responsibly, especially when dealing with trauma. It will be interesting to see how different therapists approach this, and no doubt we'll have to think carefully about how and when to introduce such an "AI mirror" to our clients so as not to cause any harm.

What should AI have the right to do?

In the next few months (or years), we’ll have to figure out which aspects of therapy AI can and should be used for. Just as in other professions, AI can take over administrative tasks and free up a workforce’s core skills. In this case, a therapist is there to heal, not to write notes or do endless paperwork. So writing progress notes and intake notes, or handling aspects of practice management, are tasks most therapists wouldn't mind handing over to AI.

Then there is client psychoeducation, using AI for training purposes, and approaches such as NLP or CBT, for example restructuring cognitive distortions that a language model can clearly detect in speech. I can imagine a chatbot quite easily correcting all-or-nothing statements or other forms of black-and-white thinking, and so helping with anxiety or depression. It could also help track OCD compulsions, and even let clients log in to do homework and revisit their own sessions. There is also potential for AI-powered supervision that could provide feedback on and assessment of a therapist’s work.

However, at least in the world of mental health, AI should always be used alongside a human. It can observe, analyze, summarize, and auto-suggest, but it's up to a healing professional to evaluate, decide, and prescribe.

So no AI therapist?

People have the right to choose what kind of service they want, and what level of quality they’ll accept. But personally, I’d be surprised if we ended up with an unregulated system in which anyone could talk to any chatbot and be prescribed any kind of medication. That would be irresponsible, from a suicide-risk point of view if nothing else, not to mention addiction, self-medication (you could go to several chatbots for medication, and unless they all talked to each other, there would be no check on that, which could lead to overdosing), avoidance, and many other issues. While there may be a market and demand for such a thing, it is in everyone’s interest to keep our experts at the helm and not give in to quick fixes that may cause harm.

Of course, some people may want an AI therapist, but that doesn’t mean we should provide potentially damaging healthcare services to the masses. Medication is absolutely necessary for some, but it’s not free candy – there are mindfulness techniques, journaling, support groups, exercise, dietary changes, and other solutions to try before whipping out a prescription pad, or at least to use alongside medication. And as mentioned above, any health apps capable of prescribing medication would all need to be connected to one system.

AI is already affecting various aspects of our lives, businesses, and the online world. As our co-founder recently wrote, “Show us a business that isn’t busy trying to incorporate AI – it will be faster than searching for those who are”. The point is that, just like the internet, AI is here. Now it’s up to us to figure out how to work with it in a smart and responsible way. And if we allow people to use therapy chatbots or similar services in an unregulated way, it should always be made clear that they are engaging with artificial intelligence and not a human. That is their choice, and sometimes something is better than nothing, but we must always know what we’re dealing with.

Furthermore, healing requires being witnessed, and at Upheal we believe that the more we free therapists to be fully present, the better they can do their jobs. So yes, please try this AI thing! And help us shape this transition with your knowledge and experience, rather than rejecting it altogether; AI has so much to offer this space.