Should AI ever be the therapist?

The landscape shifted faster than anyone anticipated.

Just months ago, discussions about AI in mental health felt theoretical — a distant consideration for future conferences and ethics committees.

Then Slingshot AI, backed by $93 million in funding, launched Ash and positioned it as an actual AI therapist.

Character.AI faces wrongful death lawsuits, among other harrowing claims, after minors who used its "mental health counselor" chatbots died by suicide.

Some states are now banning AI-delivered behavioral health care outright.

And meanwhile, therapists continue leaving the field in unprecedented numbers due to unsustainable working conditions, creating care deserts just as demand reaches historic highs.

The question isn't coming anymore. It's here.

Should AI ever be the therapist?

The promise, and the uncomfortable reality

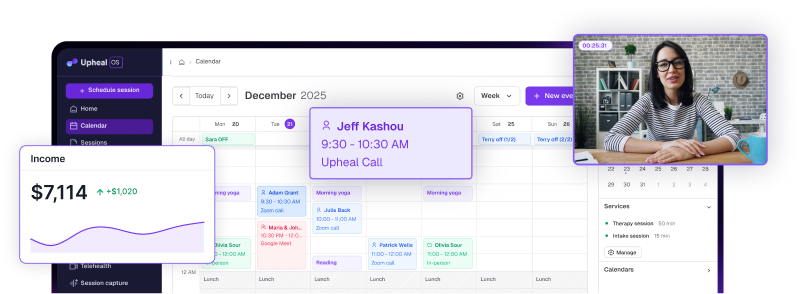

I've spent my career at the intersection of therapy and technology — first as a practicing clinician, now as Head of Clinical Operations at Upheal, where I help build AI tools that reduce administrative burdens for therapists. I believe deeply in AI's potential to enhance clinical practice.

I've witnessed how thoughtfully designed technology can give clinicians precious time back, help them notice patterns they might have missed, and create space for deeper therapeutic work.

But I've also seen the gray areas where AI raises more questions than it answers.

Here's what keeps me awake: there’s still a gap between what most AI companies promise and what the technology can actually deliver safely. And when I say there’s a gap, I’m not talking about user experience — I’m talking about the fundamental nature of healing.

The tensions we can't ignore

Three core tensions define this moment:

- Support vs. substitution: When does a helpful tool cross the line into replacing the irreplaceable human element of therapeutic connection?

- Efficiency vs. ethics: How do we balance urgent demands for accessible care with professional oversight, safety protocols, and clinical judgment?

- Accessibility vs. quality: In mental health deserts where clients wait months for care, is "good enough" AI better than nothing at all?

These aren't abstract philosophical puzzles. They're playing out right now in app stores, boardrooms, and therapy offices across the country.

A scenario that makes it real

Picture this: A mental health app available 24/7, using evidence-based CBT and ACT techniques, delivering reflective prompts and goal-setting exercises.

To users, it looks and feels unmistakably like therapy.

But there's no intake process. No diagnosis. No human clinician. No oversight whatsoever.

It's marketed carefully as "support," but the user experience tells a different story. The interface uses therapeutic language, the interactions follow therapeutic frameworks, and users develop what feels like a therapeutic relationship.

Now, let’s ask the uncomfortable question: Is that ethical?

The marketing department calls it "wellness support." The user calls it "my therapist." The technology operates in the space between those two realities, and that's where things get dangerous.

The ethical dilemma we avoid discussing

Let's raise the stakes.

Imagine your client lives in a mental health desert — few available clinicians, six-month waitlists for basic care. Their only option is that same unregulated chatbot app. It's imperfect, unsupervised, potentially risky. But it's something.

Do you recommend it?

Or do you counsel them to wait, knowing that might mean no help at all?

I've posed this question to rooms full of clinicians, and the response is always split. Some argue that imperfect support beats no support. Others insist that safety and accountability cannot be compromised, regardless of access challenges.

The discomfort in both camps reveals something crucial. That hesitation — that unwillingness to enthusiastically embrace either choice — points directly to what's missing in current AI tools.

What trustworthy AI actually requires

If we want AI in mental health to be ethical, safe, and genuinely useful, we need to establish non-negotiable guardrails:

- Radical transparency about what the tool can and cannot do. Users deserve to understand exactly when they're interacting with AI versus human clinicians.

- Clear clinical oversight. No AI tool should operate in therapeutic contexts without meaningful human supervision and accountability structures.

- Robust crisis safeguards. When someone is in acute distress, the technology must seamlessly connect them to qualified human intervention.

- Respect for therapeutic relationships. AI should enhance rather than replace the relational foundation that makes healing possible.

Today, the most trustworthy AI platforms for therapists recognize the limits of this new technology. AI performs best in supportive roles, not replacement roles. The technology excels at pattern recognition, data analysis, and administrative tasks, but struggles with clinical judgment, relational attunement, and crisis intervention.

The hybrid model: AI as co-pilot

Here's where I think we land: AI doesn't need to be the therapist to be transformative.

It can be the co-pilot: a sophisticated assistant that handles what technology does well, freeing clinicians to focus on what humans do irreplaceably well.

Tasks AI can handle confidently

There are obvious, much-needed opportunities for AI to support clinicians with administrative work, and a few very specific ways it can augment care itself.

- Session documentation

- Diagnostic suggestion

- Progress tracking

- Identifying language patterns

- Scheduling

- Billing

- Surfacing relevant treatment resources

Tasks that must remain human

- Making or confirming diagnoses

- Responding to crises

- Providing relational attunement

- Holding space for a client's pain

- Exercising clinical judgment in complex situations

The key is embedding our core professional values — safety, accountability, and the primacy of therapeutic relationship — into every AI tool we design and deploy.

The path we're actually walking

The AI therapist debate isn't really about whether technology is inherently good or problematic.

It's about whether we're willing to shape that technology around the principles that make therapy effective and healing possible.

If we, as therapists, don't define those boundaries now, the tools and the non-clinicians who build them will define them for us. Market pressures, venture capital timelines, and user acquisition metrics will drive development instead of clinical wisdom, ethical considerations, and outcomes.

Once that happens, course correction becomes exponentially harder.

The companies building these tools aren't inherently malicious. Many genuinely want to expand access to mental health support. But good intentions without clinical grounding and ethical frameworks create precisely the kind of well-meaning harm we're starting to witness.

Where we go from here

The question "Should AI ever be the therapist?" misses the more important question: "How do we ensure AI serves therapy rather than replacing it?"

My answer: AI should never be the therapist, but it can and should be an incredible partner to one.

This isn't about being anti-technology or protecting professional territory. It's about recognizing that the most sophisticated aspects of therapeutic healing — emotional attunement, clinical intuition, relational safety, and crisis intervention — remain fundamentally human capacities.

The future of AI in mental health isn't about choosing between human clinicians and technological tools. It's about thoughtfully integrating both in ways that amplify what each does best.

But that future requires us to act now. We need clinicians actively shaping AI development, not just reacting to it. We need ethical frameworks built into technology from the ground up, not retrofitted after problems emerge.

Most importantly, we need honest conversations about what we're willing to automate and what must remain irreplaceably human.

The tools are evolving rapidly. Our professional standards and ethical frameworks must evolve just as quickly, or we'll find ourselves trying to catch up to technologies that have already reshaped our field in ways we never consciously chose.

The question isn't whether AI will transform mental health care. It's whether we'll guide that transformation or simply witness it.