About Upheal: Your most pressing AI questions, answered

We’re training our AI to assist you

Many of you asked us the same questions when you considered using Upheal for the very first time. We’ve addressed them in one AI-focused article for you!

Let us preface this by saying that using AI in mental health creates opportunities to support overworked mental health professionals, whose task is to help us handle the challenges of being human.

However, we know from our own experiences with therapy that the human connection is sacred and irreplaceable, especially for doing deep healing work. Our mission has always been to free up therapists from administrative tasks and help them find better balance – not create an AI therapy robot.

In service of that, we train our AI to help with clinical documentation and aspects of the therapists’ administrative tasks.

What’s actually on our roadmap

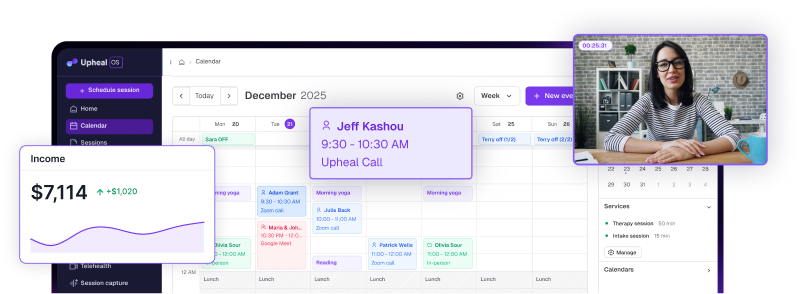

Our roadmap is focused on 1) reducing and automating administrative and manual tasks to save therapists time, 2) helping therapists improve client outcomes, for example through advanced session analytics, and 3) providing new revenue opportunities for therapists.

After all, mental health professionals care deeply for their clients and their well-being by trade and by nature. And by extension, so do we. We believe that together we can manage this evolving field and its implications for therapy. With your help, we can develop AI tools safely, ethically, and responsibly.

Why train AI at all?

For us, the task is to create high-quality automated notes and collate client data in a way that helps the therapist do as little administrative labor as possible. In case you’re picturing something nefarious, here are a few examples of what we do to put your mind at ease.

Use case 1 – Transcription and voice recognition

We need to capture sessions accurately. When the therapist uploads or records audio of a session, we don’t know whose voice is whose, so we have a model for identifying the therapist's and the client’s voices.

Use case 2 – Creating clinical documentation

If clients give us permission, we work with session data to evaluate the quality and accuracy of our notes, to test what different AI prompts do with the session content, and to run experiments that improve our note quality and intervention creation. We also automate things like time-stamping and date-stamping in order to create insurance-ready, audit-proof notes.

Use case 3 – Medication recognition

Especially for our psychiatrist audience, we have a model for identifying commonly misspelled drug names during transcription. We fix these automatically so they can be added to notes in their correct form.
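To give a feel for the idea, here is a minimal sketch of spelling correction against a list of known drug names. It is an illustration only, not our production model: the `KNOWN_DRUGS` list, the `correct_drug_names` function, and the similarity cutoff are all assumptions made for this example, and it uses simple string similarity from Python's standard library rather than a trained model.

```python
import difflib
import re

# Hypothetical mini-vocabulary for illustration; a real system would
# rely on a full, clinically reviewed drug lexicon.
KNOWN_DRUGS = ["sertraline", "fluoxetine", "escitalopram", "bupropion", "lamotrigine"]

def correct_drug_names(text, vocabulary=KNOWN_DRUGS, cutoff=0.8):
    """Replace near-miss spellings of known drug names with the known form."""
    def fix(match):
        word = match.group(0)
        lowered = word.lower()
        if lowered in vocabulary:
            return word  # already spelled correctly
        # Closest vocabulary entry, if any is at least `cutoff` similar.
        candidates = difflib.get_close_matches(lowered, vocabulary, n=1, cutoff=cutoff)
        return candidates[0] if candidates else word

    # Only consider alphabetic words of 6+ letters to limit false positives.
    return re.sub(r"[A-Za-z]{6,}", fix, text)

print(correct_drug_names("Client started sertralin last month."))
# Client started sertraline last month.
```

A high cutoff keeps ordinary words from being "corrected" into drug names, at the cost of missing more heavily garbled spellings.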

Use case 4 – Name recognition

To de-identify transcripts and make sure that all personal information is removed, we must successfully identify this data first. We replace all mentions of people, locations, and place names thanks to a model trained to recognize these.
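The replacement step can be sketched roughly as follows. This is a simplified illustration under stated assumptions, not our actual pipeline: the `deidentify` function and the placeholder format are hypothetical, and the list of detected entities is hard-coded here where a real system would get it from a recognition model.

```python
import re

def deidentify(transcript, entities):
    """Replace each detected entity with a numbered placeholder for its type.

    `entities` is a list of (text, label) pairs. In this sketch it stands in
    for the output of a name-recognition model.
    """
    counters = {}
    placeholders = {}
    for text, label in entities:
        if text not in placeholders:
            counters[label] = counters.get(label, 0) + 1
            placeholders[text] = f"[{label}_{counters[label]}]"

    # Replace longer strings first so a full name wins over a first name.
    for text in sorted(placeholders, key=len, reverse=True):
        pattern = r"\b" + re.escape(text) + r"\b"
        transcript = re.sub(pattern, lambda m, t=text: placeholders[t], transcript)
    return transcript

detected = [("Anna", "PERSON"), ("Brno", "LOCATION")]  # hypothetical model output
print(deidentify("Anna said she moved to Brno. Anna misses home.", detected))
# [PERSON_1] said she moved to [LOCATION_1]. [PERSON_1] misses home.
```

Using the same placeholder for every mention of the same name keeps the transcript readable while removing the name itself.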

AI in mental health has huge potential. It can make tedious manual labor effortless, by collating previous client medical information, capturing intake assessments, or drafting therapy notes. It can also help with the necessary supervision mental health professionals must undergo, plus the initial training of psychology and psychiatry graduates.

For the client, it can offer additional support in the form of timely chatbot intervention, should they need help outside of therapy sessions. This complements the healing being done with their mental health professional, and includes building their psychoeducation and mental health skills. Finally, AI analytics could also offer recommendations to clinicians during a session, based on previous client and session patterns. (More on that below.)

Why it’s better to use domain-specific data

You probably already know that AI can be taught to recognize patterns. The core of our AI capability relies on a large language model (LLM): an AI model that understands text by analyzing relationships between words, phrases, and sentences, and writes text by predicting the best next word in a sentence.

The model is trained on large amounts of text data, and the more data it gets, the better the model is at understanding and generating text. This is what makes our notes so good. (Well, that and a clinical team of mental health experts devoted to the responsible planning, curating, and validating of our note content.) In short, the insights that the model generates from therapy sessions are based on our configuration, instructions, and training data. By the way: none of these can leave Upheal’s environment or be leaked to the public.

Unlike open large language models trained solely on web data, which are not domain-specific, our AI uses data that is relevant and accurate for this domain. Since it is trained on data specific to a particular area, i.e. real therapy sessions, it can build a much more useful, insightful understanding of important patterns.

Why is this so exciting? Well, it can, for example, learn to recognize and remember cognitive distortions over time, homing in on the particular depressive patterns of a client by looking at their language. Examples include “always” and “never” words (black-or-white thinking), the future tense or persistent “what if” clauses (catastrophizing), and other features. Using Upheal, the AI could even summarize and auto-suggest interventions, such as: “Client displayed 4 cognitive distortions, reported a headache, and a lack of motivation. Try CBT?” The AI can help make additional observations and suggestions that can be tracked and measured over time, shared with the client or supervisors, and evaluated for progress.
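As a rough illustration of what spotting language patterns can mean, here is a deliberately simple keyword-based sketch. It is not how our models work: the `MARKERS` table and `count_markers` function are hypothetical, and a real system would need far more context than keyword matching can provide.

```python
import re
from collections import Counter

# Hypothetical marker patterns, taken from the examples in the text above.
MARKERS = {
    "black_or_white_thinking": [r"\balways\b", r"\bnever\b"],
    "catastrophizing": [r"\bwhat if\b"],
}

def count_markers(utterances):
    """Count how often each marker pattern appears across client utterances."""
    counts = Counter()
    for utterance in utterances:
        lowered = utterance.lower()
        for label, patterns in MARKERS.items():
            for pattern in patterns:
                counts[label] += len(re.findall(pattern, lowered))
    return counts

session = [
    "I always mess things up.",
    "What if I fail again? I never get it right.",
]
print(dict(count_markers(session)))
# {'black_or_white_thinking': 2, 'catastrophizing': 1}
```

Counts like these only flag candidates for a clinician to review. Someone saying “I always walk to work” is not displaying a cognitive distortion, which is exactly why human judgment stays in the loop.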

An AI therapy platform that “learns” using therapy language, patterns, and behaviors makes for a much more accurate and effective tool than a generic foundational model trained on open web data. This also means we don’t have unhelpful information clouding the picture. ChatGPT, for example, can “scan” through other general information, while our AI is based on session data. This could create a natural bias. However, since clinicians should always evaluate and edit our pre-drafted notes, checking for it could simply be a part of the reviewing process.

What about bias and representativeness?

Bias would exist even on a bigger scale simply because there are always gender, class, culture, and other factors at play – and creating representativeness is a part of the key discussions taking place at higher levels when talking about how to implement AI in mental health fields (the APA mentions it here). Although we know using AI can help with a lack of mental health professionals, accessibility to mental health services, affordability, and flexibility of care, we do have to keep examining any culture and power dynamics at play.

How we use and interpret AI data is important

In short, the AI can act as another pair of eyes offering insights to deepen therapists’ healing efforts and aid clinical observations and progress. It is neither responsible nor wise to use AI without human expertise. Our team includes mental health professionals who are involved in creating and informing the models, so that the models know what to detect and how that translates into a clinical observation, noteworthy documentation, or other insightful interpretation.

AI assistance can also help by providing affordable mental health resources and psycho-education, reducing blocks created by stigma, opening access to complementary practices and practitioners, and creating personalized treatment plans that complement traditional talking therapies. As our co-founder Juraj wrote recently, we’re here to supplement and support mental health professionals:

“We aim to augment the therapist's role, making their work more efficient and impactful. Rest assured, that our mission is NOT to replace therapists with AI. We firmly believe in the irreplaceable value of human therapists in delivering effective therapy.”

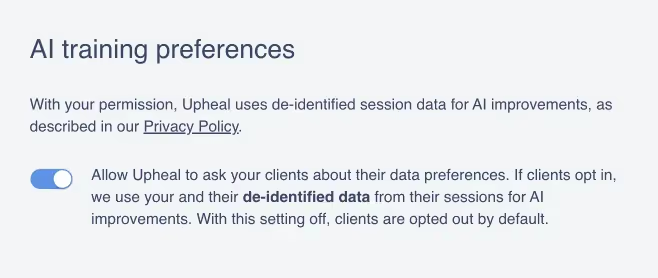

We ask for opt-in – a step others don’t take

From the examples above, you can see how using AI trained on sessions might make for a great additional resource, and better client outcomes. However, we want to be respectful of ethical beliefs and privacy rights.

We always ask therapy clients if they’d like to share their de-identified session data, and we deliberately make them aware of the choice (which some competitors do not). Moreover, data sharing is certainly not a prerequisite for using the Upheal platform. We aim to provide a helpful, valuable tool for mental health professionals, not gain access to personal session data.

We’ve even made our language more transparent about the fact that shared data will help improve aspects of our AI. A client doesn’t have to agree to this and can opt out of allowing their data to be used for fine-tuning Upheal’s AI. They are also allowed to change their minds at any time, simply by letting us know they withdraw their consent.

In addition, if a client does agree to data sharing, as per our Privacy Policy, we keep their de-identified transcript data for 1 year and de-identified datasets such as any insights, clinical notes, and so on, for 5 years. However, this shouldn’t be confused with psychotherapy records data; we are fully HIPAA compliant and follow all necessary regulations according to state and region.
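The two retention periods can be expressed as a small calculation. This is a sketch under assumptions: the `RETENTION_YEARS` table and `retention_end` function are hypothetical names, and the choice of start date is ours for the example, since the paragraph above states the periods but not when the clock starts.

```python
from datetime import date

# Retention periods from the paragraph above, in years.
RETENTION_YEARS = {"transcript": 1, "derived": 5}

def retention_end(start, kind):
    """Return the date on which the retention period for `kind` ends.

    `start` is assumed to be the date the de-identified data was stored.
    """
    years = RETENTION_YEARS[kind]
    try:
        return start.replace(year=start.year + years)
    except ValueError:
        # Feb 29 with a non-leap target year: fall back to Feb 28.
        return start.replace(year=start.year + years, day=28)

stored = date(2024, 3, 15)
print(retention_end(stored, "transcript"))  # 2025-03-15
print(retention_end(stored, "derived"))     # 2029-03-15
```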

Furthermore, clients can request deletion at any point, including if they signed up under the terms of our previous agreement. (Previously, we did not distinguish between transcript and derived datasets. However, these were kept for 6 months only.) For us, it is important to be actively involved in and in control of the development of this field together with mental health professionals.

You don’t even need to use our AI

We also support simple video calls that don’t include AI notes and insights. In addition, the therapist can choose to pause Upheal’s AI during the discussion of sensitive topics in a session that the client doesn’t want to be captured. Or, they can pause it for entire sessions at a time. This stops transcription, and the topic won’t appear in notes or insights. That said, pre-drafted progress notes, summaries, and helpful analytics cannot be created for the paused portions, since there isn’t any source data. However, pausing does give therapists the freedom to allow their clients to have various AI preferences and still use Upheal.

Ensuring HIPAA compliance, privacy, and security

If a client opts in, any shared session data is de-identified – in other words, it’s stripped of names and other information that can give clues to a person’s identity. What remains are speech-based metrics, language, behaviors, observations, and patterns that aren’t traceable back to any one individual and are only ever used after they’ve been de-identified. This is where we go beyond HIPAA requirements. Once data has been de-identified, it can, by HIPAA standards, be used for training purposes. At Upheal, however, we've decided to hold ourselves to a higher ethical standard, writing our privacy policy so that we may not use client data (even de-identified) unless the client has explicitly opted in.

Could AI even replace a human therapist?

We’re a mental health platform that supports mental health professionals, not an advocate for AI therapy robots – but even if it’s not us, could someone one day train the AI this well? It’s a topic that we should respect enough to explore, especially if we’re contributing to it. The answer is that we don’t think so – at least not for any deep healing work.

Here is the opinion of our team:

“We believe that the psychiatrist, therapist, coach, or social worker using AI is likely to be better in their role thanks to its help in 5 years compared to without it. Furthermore, this certainly doesn’t mean that the AI will be doing the job instead; rather it will be assisting in ways a supercharged tool can.”

We hope this article has been helpful. You don’t have to share client data on the Upheal platform. We want everyone to be able to choose how they wish to use Upheal and regulate their involvement in the development of AI. If you have more data or privacy-related questions, please peruse our Support center, drop us an email, or speak to our DPO (Data Protection Officer) at upheal-dpo@chino.io. Thank you for reading! Please feel free to submit blog article topics to us.