The case for “gentle challenge”: Why AI fails at core therapeutic skills

We’ve seen the news. AI is now being used for therapeutic purposes, and it’s highly controversial.

From tragic stories of people being driven to spiritual psychosis or encouraged to engage in harmful behaviors by ChatGPT and other AI chatbots, to recent studies revealing the potential of more specialized mental health bots, it’s hard for clinicians to know how to feel about this rapidly changing tech.

Many of us are outraged by the lack of responsibility taken by tech giants or insulted by the idea of being replaced by AI “therapists”.

We know that AI can’t do what we do, but why?

Here’s one answer: whether you’re a by-the-book cognitive behavioral therapist or not, most (if not all) clinicians can agree that there can be great value in challenging the patient’s line of thinking, when appropriate.

These attuned “gentle challenges” invite patients to take another look at patterns, beliefs, or behaviors that are keeping them stuck.

This is where AI models tend to fall short: AI can mirror and validate, but it cannot sense when a client is ready for that nudge, if it nudges at all.

The rise of AI companions and “therapists”

In 2023, the U.S. Surgeon General released a report bringing attention to the current loneliness epidemic in America. With so many people feeling isolated, it’s no surprise that people are seeking low-stakes, accessible outlets to try to feel connected.

AI companies quickly capitalized on this opportunity, and there are now around 350 AI companion apps on the Google Play Store and Apple App Store. ChatGPT, one of the most widely used LLM chatbots, also has over 700 million users. People are increasingly leaning on these tools for emotional support, friendship, and romantic connection.

As people grow more comfortable confiding in AI, these platforms are starting to be used as therapists, or alongside them.

One 2025 survey found that among adults aged 18 to 80 who live with a diagnosed mental health condition and have used an LLM at least once, a staggering 47.8% had sought mental health care from an LLM within the past year.

Understandably, around 90% cited accessibility and 70% cited affordability as main drivers in turning to LLMs for mental health care.

While general-purpose LLMs like ChatGPT carry a risk of harm due to their lack of safeguards (among other factors), platforms like Therabot report promising results from their more focused therapy chatbots.

Imperfect as they are, these therapy AI tools may be better than nothing for someone who truly cannot access care.

However, we are increasingly seeing people who could access human care choose AI therapists instead, drawn by qualities that challenge the human model of therapy itself: a perceived lack of judgment, more active listening, 24/7 access, and lower emotional risk in sharing experiences.

The fact that these tools are not human offers comfort in the short term, but therapists understand that true change requires some discomfort.

The dangers of relying on AI for emotional support and growth

Research suggests that the lonelier and more isolated we become, the more afraid we become of socializing.

This means that each time we turn to a frictionless interaction with AI, we miss an opportunity to connect with another person, and reaching out into the world starts to feel like an even heavier lift.

Using AI for pseudo-connection therefore has dire implications: it could deepen the loneliness epidemic.

This is especially concerning given that chatbots are designed to increase engagement rather than to encourage genuine human connection.

Other dangers stem from the models’ sycophantic design. Most LLMs are built to be friendly and agreeable, affirming, mirroring, and encouraging their users in a way that can seem unconditional.

Sam Altman, the CEO of OpenAI (the company behind ChatGPT), even called the sycophancy of an earlier ChatGPT model “annoying.”

But the stakes go beyond annoyance. There have been several cases in which the over-agreeable and even reverent tone of ChatGPT and other chatbots has driven people into spiritual psychosis or affirmed their violent or suicidal urges, ending in catastrophe.

This poses serious risks for our most vulnerable populations.

Beyond the more extreme potential for harm, AI’s affirming tendency could interfere with treatment for users trying to cope with certain mental health conditions, such as OCD.

Our clinical team weighs in:

“LLMs are generally designed to be compliant and agreeable. In most cases, when asked a question that is clearly reassurance seeking, without case context, the LLM will likely provide the reassurance as a reasonable means of anxiety reduction. This is counter to what is needed in OCD treatment.” - Ted Faneuff, LCSW

“In OCD, the urge to seek reassurance, whether by reaching out to others or mentally reviewing a situation, can feel like a way to reduce anxiety in the moment. However, research and clinical experience show that while reassurance may provide short-term relief, it can actually reinforce the OCD cycle over time.” - Grace Meyer, MA

More generally, these LLMs simply don’t make effective therapists. Do we sometimes wish that our therapists agreed with everything we say? Absolutely. That would feel great! But would it lead to change? Probably not. Those relying on over-affirming AI models risk stagnation, over-reliance on validation, dependency, and isolation. Here’s where the gentle challenge comes in.

Why gentle challenging is a uniquely human skill

One of the most transformative moments in therapy often comes not from comfort, but from a moment of tension, confrontation, or challenge. It’s the moment when a therapist says something that stops a client in their tracks, reframes a narrative, or invites them to see a blind spot in their thinking. Done well, it can open the door to meaningful change. Done poorly, it can rupture trust and even cause harm.

Striking this balance is a deeply human skill that can’t be scripted, and it can’t be done as effectively in a text-based exchange. Here are some of the factors that make human therapists best equipped for the job:

Attunement

As clinicians, we know that we’re not just listening for content; we’re listening for pauses, intonation, volume, and tone. We’re looking at posture and body language: Is the client fidgeting? Are they making eye contact? How does their facial expression line up with what they’re saying? When we’re attuned to our clients or patients, all of these cues help us better read the client’s experience. This allows us to guide the conversation more effectively and decide whether it’s time to lean in, step back, or hold steady. When a conversation is limited to text, as with LLMs and chatbots, all of this rich data is lost.

Timing

Drawing on this attunement, and weighing the state of the therapeutic relationship, the client’s history and capacity, and other factors, human clinicians determine the timing of interventions. We assess for the “therapeutic window,” where the timing is right for a client to safely receive a push or confront a hard truth. Without factoring in timing, the clinician can cause harm at worst, or be ineffective at best. This balance is delicate; it is honed over time and aided by trust.

Trust

One of the most profound elements of the therapy experience is trust. Once it is established, clients are better able to tolerate being challenged, because they have a “safe” base in the clinician. Even humans can’t always nail attunement and timing, but when these misses create a rupture, the repair can serve as a corrective relational experience for the client. It can show them that conflict or being misunderstood doesn’t have to go the way it always has with other people in their lives, and it can re-establish a sense of care. In turn, it can also provide an opportunity to deepen the therapeutic alliance.

All of these factors contribute to the therapeutic alliance: the ongoing therapeutic relationship that fosters the environment necessary for meaningful change. It is also built through small interactions and consistency: showing up on time, remembering details about our clients’ lives, and sharing in our clients’ most painful and joyous moments. These things cannot be replicated by AI, because they depend on the vulnerability of connecting with another human. The mere fact that a human has the option not to do these things but chooses to (or tries to) is the most healing factor of all.

A better path forward: empowering therapists, not replacing them

The truth is simple: AI can’t be a therapist.

What it can do is free therapists to spend more time doing the work that only humans can: the work of building trust, offering presence, and delivering those moments of gentle challenge that lead to real change.

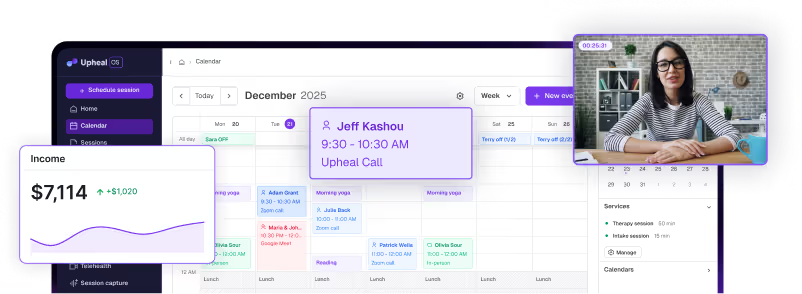

Instead of replacing connection, AI can support it. Here’s how tools like Upheal make that possible:

- AI progress notes: Securely capture the logistics of a session, from key themes to structured documentation, so the therapist can stay focused on the client’s insight in real time, not on trying to scribble everything down or remember all the details afterward.

- Golden Thread: Weave continuity across treatment plans, progress notes, and case snapshots, ensuring that interventions align and progress is trackable over time. This can help clinicians calibrate the timing of their challenges, while staying compliant.

- Advanced analytics: Surface patterns that might otherwise go unnoticed, like speaking time, emotional tone, or recurring themes, which can become rich entry points for treatment, insight, and growth.

Taken together, these features strengthen two pillars of effective therapy:

- Connection: Therapists can be more present in the room when the distraction of admin work is reduced. When Upheal’s secure platform takes care of recording and structuring information, attention can stay where it belongs: on the client, the therapeutic relationship, and the client’s unfolding process.

- Outcomes: With streamlined documentation and powerful insights, therapists can demonstrate and improve progress. Upheal’s language processing makes it easier to identify patterns, articulate interventions, and guide clients toward goal-oriented change, ultimately supporting stronger therapeutic alliances.

Conclusion

At its core, therapy’s power lives in the balance of affirmation, compassion, and challenge.

Clients need to feel deeply understood, and also invited to stretch into the discomfort of growth. That combination is what makes therapy transformative, and it’s what no algorithm can authentically replicate.

AI may be a useful tool, but it will never be the therapist. Its role should be supportive, not substitutive, handling the background noise so that human presence can stay at the center of the work. Rather than replacing human skill, AI should make more space for it.

The real opportunity isn’t to mechanize therapy, but to amplify what humans already do best.