The Therabot study: Can this AI chatbot really improve patients’ mental health?

With AI chatbots like ChatGPT, Claude, and Gemini, people have access to what feels like a therapist in their pocket.

But using a generic AI chatbot for mental health support can lead to disaster. These models can reinforce unhelpful thoughts, show bias, and give dangerous advice. They’re not designed with therapeutic care in mind.

So, what happens when an AI tool is built by experts specifically to deliver mental health treatment? That’s what the Therabot study set out to answer.

Spoiler: while the results were promising, the researchers ultimately concluded that AI isn’t ready to take on mental health care on its own, even when a tool is trained in therapeutic practices.

Let’s take a closer look.

How did the Therabot study work?

Researchers from Dartmouth College built a generative AI chatbot called Therabot. Unlike generic AI models, it was trained on psychotherapy and cognitive behavioral therapy (CBT) best practices.

A randomized controlled trial explored how Therabot performed in a therapeutic setting, and the results were published in NEJM AI, a journal from NEJM Group, the publisher of The New England Journal of Medicine.

The study included 210 adults who fell into one of three groups:

- Clinically significant symptoms of major depressive disorder (MDD)

- Clinically significant symptoms of generalized anxiety disorder (GAD)

- Clinically high risk for feeding and eating disorders (CHR-FED)

Participants were split into two groups. One group interacted with Therabot for four weeks through a smartphone app. The other group received no treatment.

As with other AI chatbots you might have used, participants interacted with Therabot through written prompts. It responded with conversational answers and open-ended questions, encouraging further conversation.

After four weeks of treatment, and then again at an eight-week follow-up, participants reported on changes in their symptoms. They also shared how they engaged with Therabot and whether they formed a therapeutic relationship with it.

What results did the Therabot study find?

Over four weeks of treatment, participants in the Therabot group used the chatbot for an average of six hours — equivalent to eight 45-minute therapy sessions.

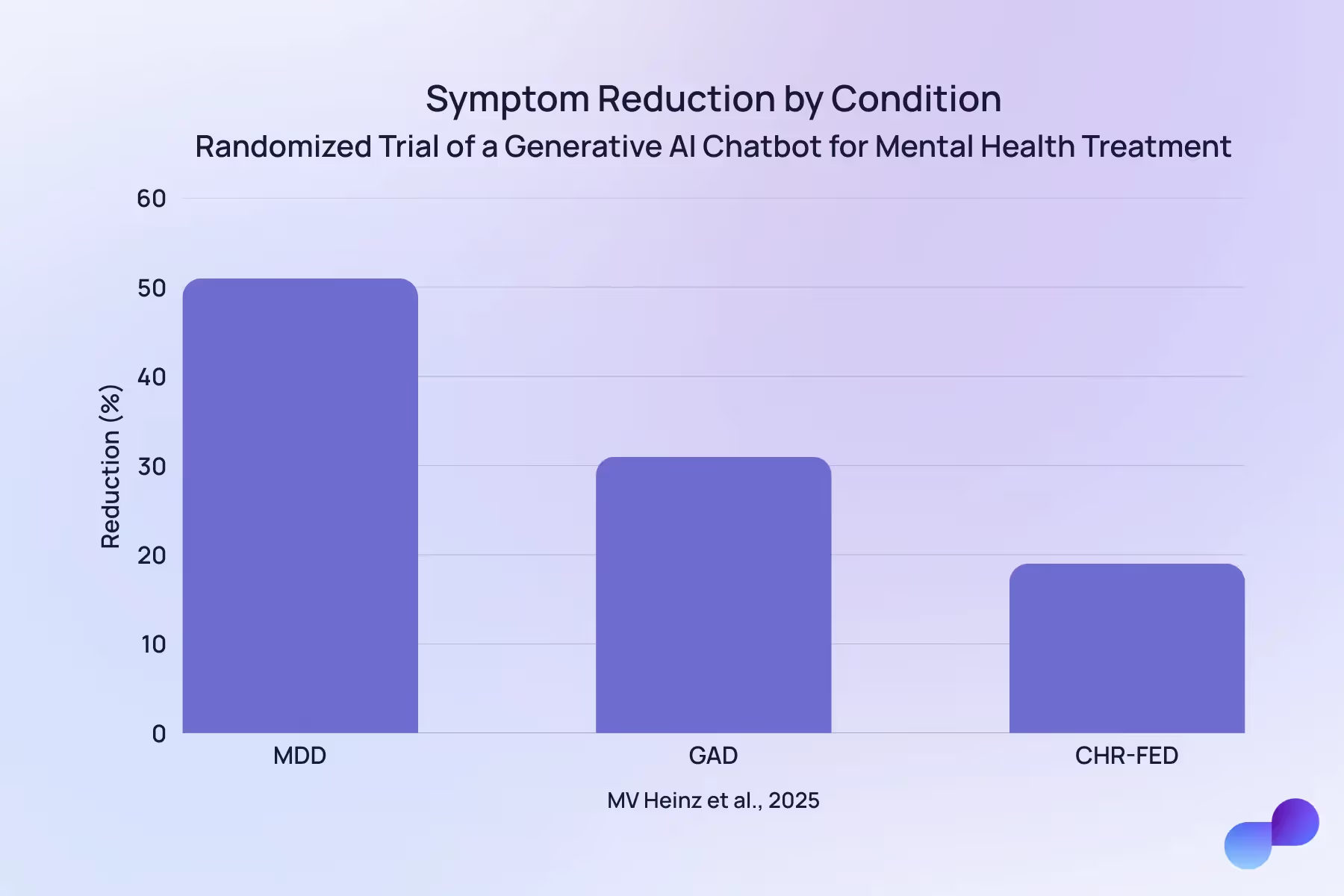

That led to some promising results. The Therabot group saw a greater reduction in symptoms compared to the control group.

At the eight-week follow-up, compared to their baseline levels:

- Participants with MDD had a 51% average reduction in symptoms

- Participants with GAD had a 31% average reduction in symptoms

- Participants at CHR-FED had a 19% average reduction in symptoms

Beyond the raw numbers, those with MDD reported improvements in their mood and overall well-being. And many participants with GAD went from having moderate anxiety to mild anxiety, or from having mild anxiety to being below the clinical threshold for a diagnosis.

Therabot users didn’t only feel better. They said they formed (what felt like) a therapeutic alliance with the chatbot, comparable to the alliance clients report with a human therapist. (Of course, therapeutic alliance is tricky to measure, and highly subjective.)

This part surprised the Dartmouth researchers. “We did not expect that people would almost treat the software like a friend,” said senior author Nicholas Jacobson in an article from Dartmouth College. “It says to me that they were actually forming relationships with Therabot.”

It’s not clear why users bonded so well with the AI tool, but Jacobson has a theory. “My sense is that people also felt comfortable talking to a bot because it won’t judge them,” he said.

Are AI chatbots safe?

AI chatbots aren’t always safe for mental health use cases. Currently, generic AI chatbots don’t have many safety features built in — although companies like OpenAI are rolling more out.

The Therabot chatbot, however, had a few layers of safety.

Firstly, researchers monitored conversations to make sure Therabot delivered appropriate therapeutic responses.

Beyond this, if the AI model detected high-risk content — such as suicidal ideation — it would prompt the user to call 911 or contact a suicide prevention or crisis hotline through an onscreen button.

Efficacy, while obviously important, is just one aspect of how well AI therapy bots work. Safety is another major concern — as is privacy.

Other studies on AI as a therapist

There isn’t a ton of research on AI chatbots in therapy, but we’re starting to learn how non-therapeutic AI tools stack up against the real deal.

For example, a 2025 study compared responses from generic AI chatbots to responses from licensed therapists (the human kind).

Researchers created two fictional scenarios and studied three chatbots and 17 human therapists. The results showed that, compared to human therapists, AI chatbots more often used:

- Reassuring language

- Affirming language

- Psychoeducation

- Suggestions

On the flip side, human therapists used more self-disclosure and elaboration.

The study concluded that generic AI chatbots weren’t suitable for mental health conversations, especially for people in crisis. Some key issues included giving generic interventions and overusing directive advice without gathering further context.

Beyond these issues, there’s concern about the bias that generic AI models can have. One study found that AI chatbots can show stigma toward issues like alcohol dependency and schizophrenia, which could clearly be harmful to people seeking support for those issues.

So, do AI therapists work?

AI therapists show some promise.

As the Therabot study shows, when trained in mental health treatment, they could support people who don’t have access to human care, or those who may not reach out for it. And not just support them, but make a real difference to their symptoms.

AI therapy chatbots could also provide 24/7 support — something that came up in the Therabot study as some users initiated conversations in the middle of the night, a vulnerable time for mental health issues.

But we’re not quite there yet.

There’s still a long way to go

The Therabot researchers themselves say that more research on AI therapy chatbots, with larger sample sizes, is needed. But the expert oversight that kept Therabot users safe would be much harder to provide at scale.

There are a few other limitations with the study. For example, we don’t have enough research to know whether Therabot could help people for whom CBT isn’t the most suitable treatment modality. And we don’t know if it could help those in crisis or with severe anxiety, depression, or eating disorder symptoms.

And beyond those three conditions, we don’t know how helpful (or harmful) Therabot could be for people with other mental health challenges — like bipolar disorder, ADHD, or PTSD — or in situations that don’t fit neatly into a diagnosable condition — like personal growth, burnout, or divorce.

We do know that generic, non-therapeutic AI chatbots can lead to negative outcomes when people turn to them for mental health care. But even chatbots trained in therapeutic practices can’t act as therapists just yet.

As Michael Heinz, the study’s first author, says, “While these results are very promising, no generative AI agent is ready to operate fully autonomously in mental health where there is a very wide range of high-risk scenarios it might encounter.”

There are many reasons for this, including safety and privacy concerns. Another is that therapeutic healing is a human experience. Interpersonal neurobiology, for example, shows how our brains are shaped by our experiences, and especially by our relationships with others.

Human therapists can interact with clients with a level of empathy, connection, and real-world understanding that AI chatbots just don’t have.

Final thoughts: How AI can help patients and therapists now

AI therapy chatbots are still lacking. While early research, like the Therabot study, shows they have potential, it also highlights how these chatbots simply aren’t ready to deliver mental health care.

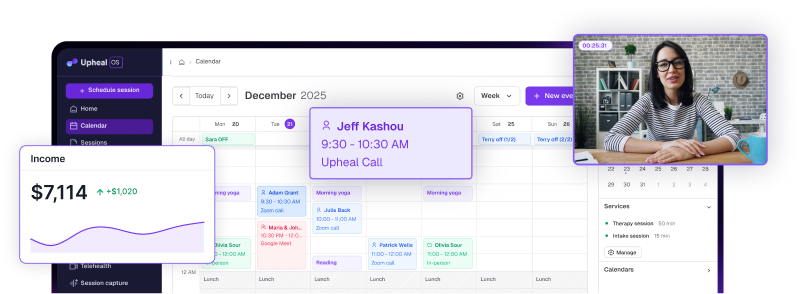

But AI does have a place in therapeutic settings now: not by replacing human therapists, but by making them better.

Upheal uses AI to streamline tasks like progress notes, saving clinicians hours each week. This alone can help to reduce the burnout and emotional exhaustion that’s so prevalent among therapists.

Just as importantly, Upheal frees you up to be more mentally present and attentive during your client sessions.

With an AI medical scribe, you’re not mentally drafting notes while listening to a client. You’re fully engaged, strengthening the human connection people are still looking for (and need) in therapy.

AI tools can’t provide human empathy and deep, real-world expertise. But they can free up human therapists to do exactly that.