Survey: How therapists are using AI

The conversation about AI in mental health has become a peculiar theater of extremes.

In one corner, we have the doomsday prophets warning that AI will replace human connection with algorithmic approximations of empathy.

In the other, techno-optimists promise that silicon saviors will democratize mental health care and solve the crisis of access overnight.

Both narratives miss something fundamental: mental health is a human experience.

Mental health care is a human system.

AI, too, is a system designed by humans to solve human problems.

When we talk about it like an invisible, spontaneous force, we opt out of the more important conversation: how will we humans contribute to building modern systems that authentically solve human problems?

While Silicon Valley debates whether AI will replace therapists, we asked over 500 practicing clinicians to weigh in on a better question: what problems can AI actually solve?

Therapists are struggling

You've just finished your seventh back-to-back session.

Your last client disclosed trauma that required every ounce of your emotional and cognitive bandwidth.

Now you have exactly four minutes (if you’re lucky!) before your next appointment.

In those four minutes, you need to document that complex session in a way that satisfies insurance requirements, maintains clinical integrity, and somehow captures the profound human exchange that just occurred. Or you need to hold every detail in your head through the rest of the day's sessions, until you’re able to log back on that evening.

There’s a layer of this that is burnout, defined by pioneering researcher Christina Maslach as the emotional fallout from being stretched too thin for too long.

But the problem goes a layer deeper, too.

Workforce shortages and high-strain environments are plaguing clinicians, while time-consuming administrative responsibilities are limiting our availability for actual care.

Empathy, the necessary cornerstone of human care, requires time and space. But we’re so buried in paperwork that we lack the very conditions that make authentic empathy possible.

These problems are not individual failures. And this is not simply the emotional fallout of disenchanted providers.

These are structural wounds.

This is moral injury.

Moral injury happens when you know what good care looks like, but the system doesn’t allow you to provide it. It’s a wound that comes not just from stress, but from being asked to act in ways that conflict with your values.

Our conversation must shift — away from blaming or replacing clinicians.

We need to talk about real, systemic solutions that allow therapists to do the work they are meant to do.

Is AI even helpful?

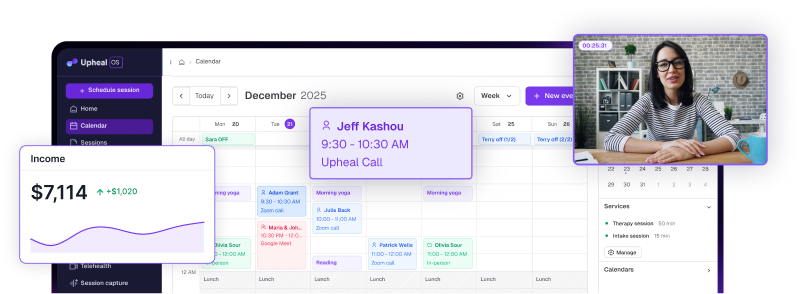

After implementing Upheal in my own practice and witnessing its impact, I wanted to know: was this actually helping address the systemic challenges we face? Or was it just another layer of technological complexity?

So we asked.

We surveyed 583 clinicians who use AI-powered clinical tools in their practices, focusing on four key dimensions:

- therapeutic presence

- burnout

- cognitive load

- clinical engagement

We used a standard 5-point Likert scale and included an open-ended question about observed changes.

The responses surprised even me.

How therapists are using AI

The administrative burden therapists face is real, urgent, and solvable. And AI can help. We just need to focus on the real problem at hand.

The 583 clinicians we surveyed aren't using AI to conduct therapy. They're using it to handle everything that gets in the way of therapy.

AI medical scribes

Consider the ambient scribe — essentially a digital documentation assistant that captures session content while the therapist maintains full presence with their client.

With these tools, therapists are harnessing sophisticated natural language processing that can…

- distinguish therapeutic interventions from casual conversation

- identify evidence-based techniques as they're applied, and

- structure this information into clinically sound documentation.

Reading Upheal notes makes me feel like I have had a skilled, confidential witness to the session. It sometimes provides new insights or notices details that I missed.

The technology doesn't just capture what happened — it helps clinicians see patterns they might have missed while managing the cognitive load of simultaneous documentation and therapeutic engagement.

I'm actually listening to my clients now instead of mentally drafting my notes while they talk.

Treatment plans

In clinical training, we learn about the Golden Thread — the continuous narrative line that should connect every piece of documentation from intake through discharge, demonstrating medical necessity and treatment progress at each step.

It's the gold standard of clinical documentation.

It's also difficult to maintain in real practice.

Why? Because creating that coherent narrative requires time and cognitive resources that the current system doesn't provide.

You'd need to review past notes before each session, craft new notes that explicitly reference treatment goals, and regularly update treatment plans to reflect evolving clinical pictures.

When you're seeing 25-30 clients per week, each with their own complex narrative, maintaining those golden threads becomes a Sisyphean task.

AI tools like Upheal can analyze intake sessions to create case snapshots that become the foundation for treatment plans, automatically resurfacing relevant goals and interventions in each subsequent progress note.

I don't have to worry about how I will summarize ALL of this — I get to choose what I will communicate to insurance but Upheal has it all written out for me so I can literally cut out what I don't want to say and keep what I wish to say in order to justify medical necessity.

The narrative coherence that insurers demand — and that actually does improve clinical care — becomes achievable without sacrificing session time or evening hours.

Compliance

Let’s talk about the invisible tax on clinical practice: compliance.

Every therapist, regardless of payment model, navigates a labyrinth of documentation requirements set forth by state licensing boards, professional ethics codes, and legal standards.

Even cash-pay practices must maintain records that could withstand scrutiny from licensing boards, satisfy HIPAA requirements, and meet professional standards for clinical documentation.

But here's what often goes unsaid: even clinicians who've opted for cash-only practices to escape insurance bureaucracy still carry a significant compliance burden.

They must document to professional standards.

They need records that protect both client and clinician.

They require documentation that demonstrates ethical, evidence-based practice — not for insurers, but for their own professional integrity and legal protection.

The result is that compliance requirements, originally designed to ensure quality care, have become a barrier to providing it — regardless of payment model.

Now, therapists are using AI to check their documentation against established criteria, automatically confirming compliance with relevant standards and flagging potential issues before they become problems.

"My stress level is so much lower... I never have to worry my documentation isn't up to par."

AI technology can finally equip clinicians to maintain documentation that meets professional standards, without sacrificing the time and mental energy needed for actual clinical work.

What clinicians had to say

The ratings and open-ended responses that hundreds of clinicians provided to our survey offered a lot of color on the ways AI can actually solve real problems in behavioral health care.

🫴 Deepening, not replacing, connection

The first finding, that 86.7% of clinicians reported increased presence, matters more than you might think.

Decades of psychotherapy research consistently show that the therapeutic alliance is one of the strongest predictors of positive outcomes, regardless of treatment modality.

Having systems that actually support the way I function doesn't just help me stay on track; it also backs up the message I share in session. It's proof that what we talk about really works.

When therapists can be fully present rather than mentally multitasking, the quality of that alliance deepens.

My clients have noticed it as well, I'm very satisfied with the product... I feel lighter during session, more present.

The irony isn't lost on me — an AI tool made therapists more human in their sessions.

❤️‍🩹 Meaningfully relieving provider burnout

How many webinars about "self-care" have you sat through where some well-meaning administrator suggests you try meditation or yoga to manage your burnout?

As if the solution to systemic dysfunction is individual mindfulness.

You can’t box breathe your way through impossible caseloads and documentation demands.

In our survey, 82.4% of clinicians said that AI had a hand in reducing their burnout.

My burnout is still bad since I'm working at a public mental health agency, but using this has helped. I have ADHD and the task avoidance was strong when it came to my notes. Upheal has helped so much with that. I don't dread doing notes anymore.

That doesn’t come from meditating more. It comes from protecting cognitive margin: these clinicians were spending less mental and emotional energy on documentation.

More relaxed, more present, more engaged. I have felt happier and now look forward to doing therapy since I don't have to worry as much about documentation.

This isn't about working smarter, not harder. It's about recognizing that some work shouldn't be hard in the first place.

⚡️ Automating the work that should be automated

Let me paint you another picture of the peculiar cruelties of American behavioral health care.

You provide a life-saving intervention for someone experiencing suicidal ideation.

Six months later, you receive a clawback notice because your documentation didn't include the specific phrase "safety plan reviewed and updated" even though you spent twenty minutes doing exactly that.

Or this:

You accept a client's insurance, which reimburses you $73 for a session.

The billing platform takes 3%.

The EHR charges a monthly fee.

The clearinghouse wants their cut.

By the time everyone's had their piece, you're left waiting to be paid for highly skilled, emotionally demanding work.

These aren't edge cases — they're Tuesday.

The 91.2% of clinicians reporting reduced mental effort weren't just saving time. They were reclaiming cognitive resources hijacked by administrative absurdity.

Documentation is significantly less stressful and I'm more confident in the quality of it and the ability to pass audits.

This is the work that should be automated: the translation of human conversation into insurance-speak, the checking and double-checking of compliance boxes, the reformatting of clinical insights into billable codes.

"My stress level is so much lower, it is hard to compare. Even when I fall a bit behind in my documentation, I have no real stress about it."

🧠 Harnessing human expertise

The fourth finding — 85.8% reporting increased engagement and reflection — points to something profound about the role of human expertise in an AI-augmented practice.

When administrative burden lifts, something interesting happens.

Clinicians don't just do the same work with less stress.

They do better work.

I have been able to incorporate activities and do more research for my clients and deliver more psychoeducational information.

They read research again.

They pursue continuing education.

They take on complex cases they previously would have referred out.

They do things like… you know… go to the bathroom and recharge…

With the decrease in daily stress related to documentation, I feel more capable. I feel awesome being able to put more energy into research and learning.

This matters particularly for neurodivergent clinicians, who often bring unique competencies their clients desperately need but who also face additional challenges with administrative tasks.

Things feel more balanced and easeful — especially as a therapist with ADHD working with clients who relate.

The technology isn't replacing human expertise. It's creating space for it to flourish.

Of the 583 clinicians we surveyed, only 17 felt less engaged. These stark numbers reinforce that AI can help clinicians deliver the care they were meant to provide.

AI should support, not replace, clinicians

Let's be clear: AI is nowhere near sufficient for the complex, nuanced, and critical application of mental health care delivery.

The therapeutic relationship, with all its subtleties, contradictions, and profoundly human moments, cannot be reduced to an algorithm.

But at a systemic level, we have massive problems around unmet human needs that can and should be addressed with more sophisticated technological support.

The question isn't whether AI belongs in mental health care.

It's how we ensure it serves the right purpose.

The hundreds of clinicians who shared their experiences represent a diversity of theoretical orientations, practice settings, and clinical populations.

Some work in private practice, others in community mental health.

Some are newly licensed, others have decades of experience.

Yet their responses revealed remarkable consensus: when AI handles administrative burden, human connection deepens.

There are three things all the clinicians we surveyed have in common:

They care about their clients.

They deserve support.

They use Upheal.

I literally feel like using Upheal has been life changing. I have a way better work-life balance, am more present for my kids, feel less anxiety after hours about having a pile of admin waiting for me.

If you're drowning in documentation, dreading your admin tasks, or wondering if there's a better way to maintain your practice without sacrificing your presence, you're not alone. And you don't have to continue carrying this weight alone.

Upheal offers a free 14-day trial — enough time to experience what practice feels like when technology serves its proper role: amplifying your human expertise rather than replacing it.

Because ultimately, the future of mental health care isn't about choosing between humans and machines.

It's about human clinicians defining the systems that will really help: systems that let humans do what only humans can do, which is to connect, understand, and heal.