Integrating AI into therapy – an academic review

More and more, AI is being woven into various aspects of modern life.

The idea of integrating AI into therapy has been a divisive topic, but rather than endlessly discussing potential fears or exaggerating unknown outcomes, we wanted to take an honest look at what the research shows.

We’ve been waiting for it!

The purpose of this article is to describe the various uses of AI in therapy at the present moment and summarize the current findings from academic research regarding the effectiveness of implementing AI in therapeutic practice.

In this article, we’ll outline the current applications of AI in the therapy space before going into a comparison of the possible benefits and limitations. And of course, we’d love to hear from you about the current findings.

How is AI being used in therapy?

Looking at the research, AI is being integrated into therapy in 6 main ways, ranging from chatbot support (e.g., Wysa, Woebot) to therapist training and client risk detection.

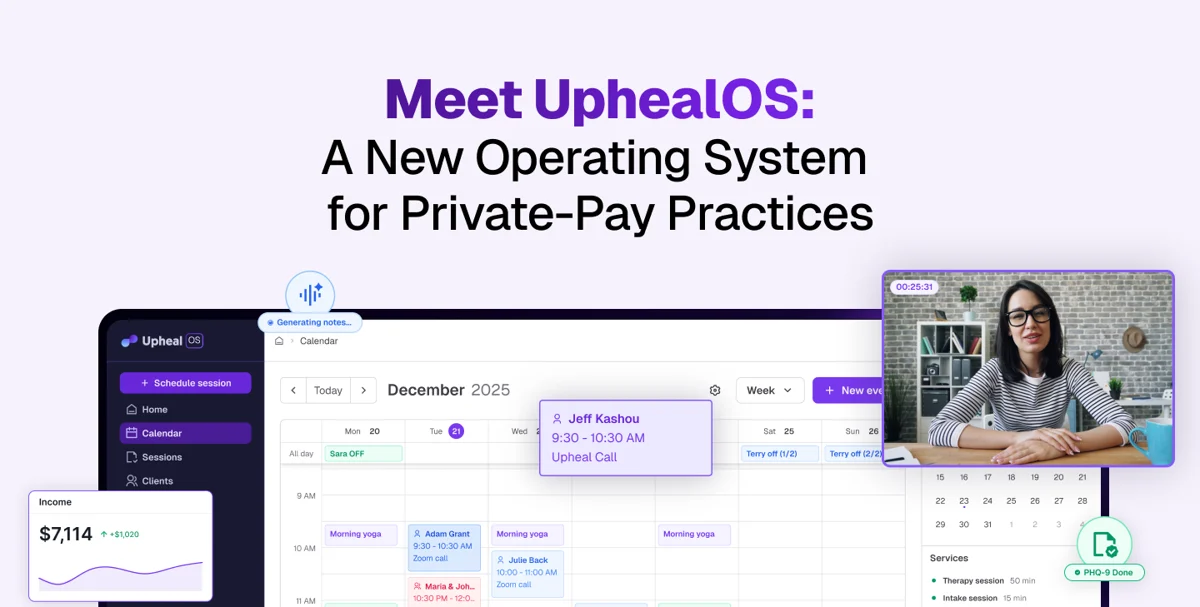

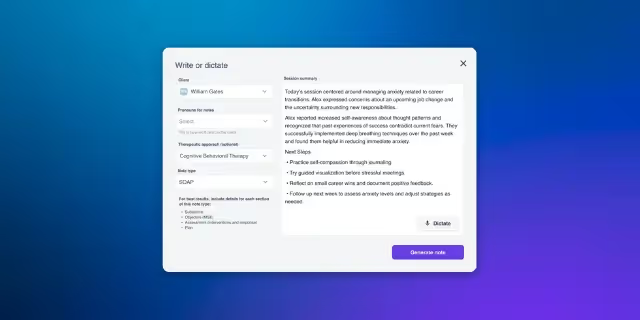

Therapists are also using AI tools to supplement their daily practice; for example, they may use AI to conduct sentiment analysis of client sessions, monitor session-to-session progress, and write notes using AI scribe products like Upheal.

AI-based predictive models have also been developed to detect suicide risk and predict substance use treatment outcomes, though their use in routine clinical practice remains limited.

Let’s look at each of these in more detail.

Using AI as chatbots

Most academic literature on integrating AI into psychotherapy has focused on the effectiveness of AI chatbots in reducing certain mental health symptoms. Two reviews (Casu et al., 2024; Lau et al., 2025) found that chatbots show promise in reducing symptoms of depression, anxiety, substance use, and disordered eating behaviors. Chatbots have also been explored as preventive interventions for conditions like eating disorders and as supportive tools for individuals with chronic illnesses like Parkinson’s disease and migraines.

Results from several recent randomized controlled trials (RCTs) corroborate the findings of the Casu et al. and Lau et al. reviews. For example, an RCT comparing Therabot (an AI chatbot) with a waitlist control group, published in the New England Journal of Medicine AI (Heinz et al., 2025), found that participants in the Therabot group showed reduced symptoms of depression, anxiety, and eating disorders relative to the waitlist group. Similarly, an RCT conducted with college students in China comparing a chatbot called XiaoNan with bibliotherapy found that the chatbot group showed reduced depression and anxiety (Liu et al., 2022).

To date, only one study has compared an AI chatbot with traditional, in-person therapy. It found traditional therapy to be more effective, although the AI chatbot was more easily accessible to participants in crises (Spytska, 2025).

Assessing the therapeutic alliance and therapy progress

Although the therapeutic alliance is an important predictor of client outcomes, regular use of alliance self-report measures has failed to gain widespread implementation.

Scientists have begun to study whether AI can track the therapeutic alliance between therapist and client (Aafjes-Van Doorn et al., 2025; Goldberg et al., 2020). This is a particularly important area of study given that the therapeutic alliance is a strong predictor of clinical effectiveness (Baier et al., 2020).

Research indicates that AI could enable real-time detection of declines in therapeutic alliance from text, audio, or video and inform timely interventions as needed.

Scientists have also begun to use AI to monitor changes in client outcomes over the course of therapy (Meier, 2025). As progress monitoring is linked to improved therapy outcomes (Newnham et al., 2015), AI-enabled progress tracking may enhance therapeutic effectiveness.
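To make the idea of session-to-session tracking more concrete, here is a minimal sketch of how a decline in an alliance or progress score might be flagged. It is only an illustration under stated assumptions: the function name, the 1 to 7 rating scale, the window size, and the threshold are all hypothetical and are not taken from the studies cited above, which rely on much richer models of text, audio, or video.

```python
from statistics import mean


def flag_declines(scores, window=3, drop_threshold=0.5):
    """Return indices of sessions whose score is more than `drop_threshold`
    below the average of the preceding `window` sessions.

    Hypothetical helper for illustration only; not a validated clinical rule.
    """
    flagged = []
    for i in range(window, len(scores)):
        # Baseline is the mean of the sessions immediately before session i
        baseline = mean(scores[i - window:i])
        if baseline - scores[i] > drop_threshold:
            flagged.append(i)
    return flagged


# Hypothetical per-session alliance ratings on a 1-7 scale (made-up data)
session_scores = [5.8, 6.0, 5.9, 6.1, 4.9, 5.0, 5.7]
declines = flag_declines(session_scores)
print("Sessions to review (0-indexed):", declines)
```

In practice, a flagged session would simply prompt the therapist to check in with the client; any real threshold would need to be validated against clinical data.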

Training and evaluating therapists

AI appears to be useful in training and evaluating therapists as well. In fact, one study compared a pre-trained AI supervisor generated by ChatGPT-4 with both a non-trained AI supervisor and a qualified human supervisor, and found that the pre-trained AI supervisor was the most effective in providing clinical feedback to a therapist trainee, according to trainee ratings of the quality of feedback provided by the supervisor (Cioffi et al., 2025). More specifically, trainees perceived the pre-trained AI supervisor as most skilled at incorporating empathy and supportiveness into its feedback, and as providing feedback that was most beneficial to their professional development.

Relatedly, another study found AI-trained models were useful in providing clinicians with feedback on their ability to create a collaborative relationship with clients. The study authors highlighted the potential of AI to deliver more targeted and effective training resources for clinicians in the future (Doorn et al., 2025).

These findings suggest further integration of AI into supervision may enhance clinical training. For example, tools like Upheal that can provide line-by-line transcripts of session content can help supervisors provide trainees with targeted and specific feedback on examples of (in)effective techniques used in sessions.

Supplement to traditional therapy

Studies show that AI can be used as a supplement to traditional therapy and augment therapy effectiveness. For example, a review of studies found that integrating AI into art therapy led to increases in well-being (Zubala et al., 2025).

Another study found that AI could be used to engage patients in discussing session topics outside of their cognitive-behavioral group therapy sessions, which led to improved session attendance, homework completion, and mental health outcomes (Habicht et al., 2025).

AI could also supplement traditional therapy by providing resources such as 24/7 mood tracking, guided self-help exercises, and real-time feedback on homework exercises completed outside of sessions. …

Detecting risk and predicting treatment outcomes

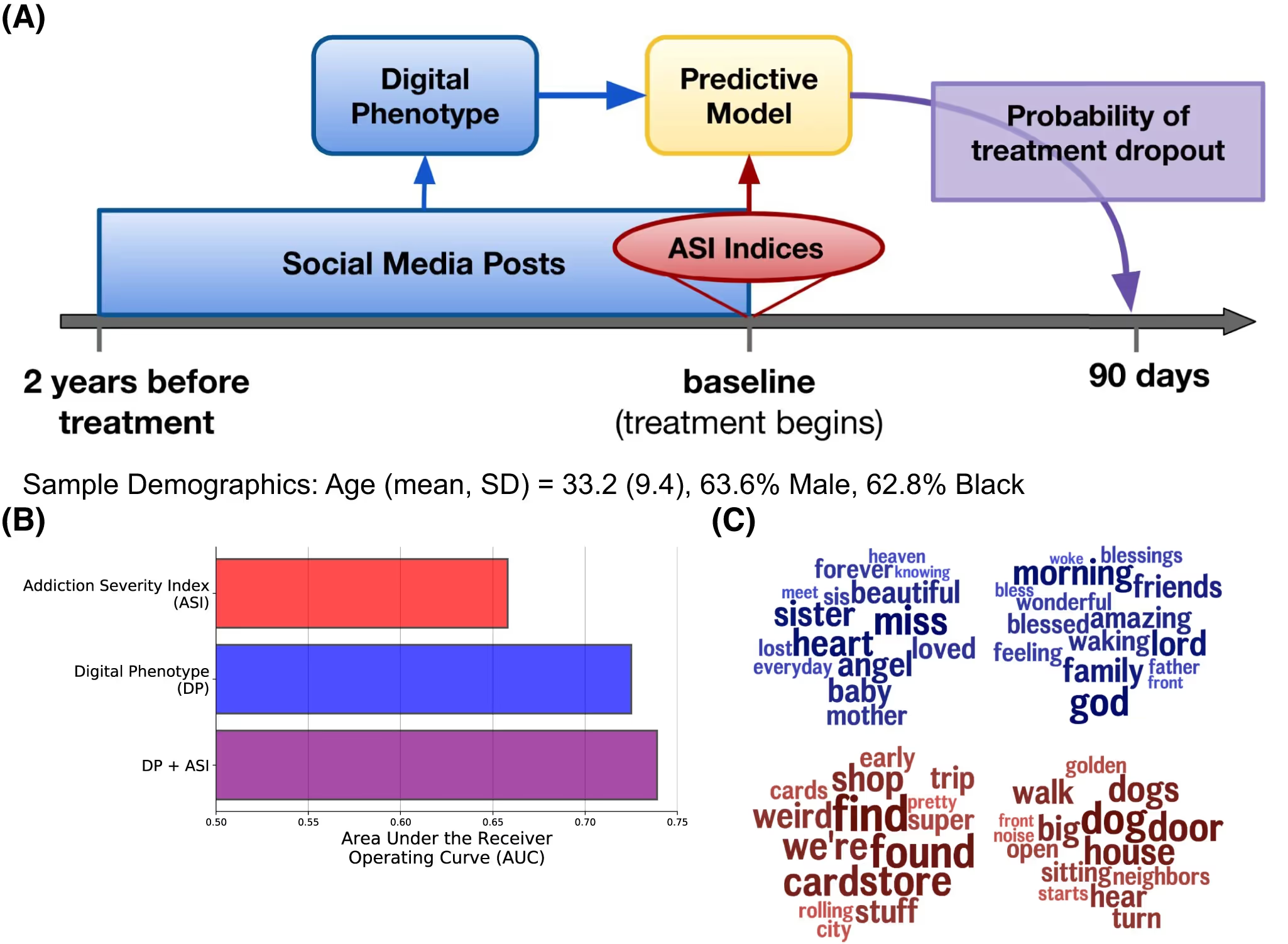

Finally, researchers have found that AI can be used effectively to predict substance use treatment outcomes and dropout (for example, Giorgi & Curtis, 2024).

One approach is to create a “digital phenotype,” which refers to a quantitative characterization of an individual’s digital behavior, particularly their language use on social media platforms. This phenotype can then be added to structured interviews or clinical assessments.
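As a rough illustration of what “quantitative characterization” of language use can mean, the sketch below turns a handful of text posts into a few simple numbers. The feature set, the tiny word lists, and the names `DigitalPhenotype` and `build_phenotype` are hypothetical choices made for this example; they are not the features or methods used in the cited research, which draws on far larger and validated language models.

```python
import re
from dataclasses import dataclass

# Toy word lists, assumed for illustration only (not a validated lexicon)
FIRST_PERSON = {"i", "me", "my", "mine", "myself"}
NEGATIVE_WORDS = {"sad", "alone", "tired", "hopeless"}


@dataclass
class DigitalPhenotype:
    post_count: int
    avg_words_per_post: float
    first_person_rate: float   # share of words that are first-person pronouns
    negative_word_rate: float  # share of words found in the toy negative list


def build_phenotype(posts):
    """Summarize a list of text posts as a few simple language features."""
    tokens_per_post = [re.findall(r"[a-z']+", p.lower()) for p in posts]
    all_tokens = [t for tokens in tokens_per_post for t in tokens]
    total = len(all_tokens) or 1  # avoid division by zero on empty input
    return DigitalPhenotype(
        post_count=len(posts),
        avg_words_per_post=len(all_tokens) / max(len(posts), 1),
        first_person_rate=sum(t in FIRST_PERSON for t in all_tokens) / total,
        negative_word_rate=sum(t in NEGATIVE_WORDS for t in all_tokens) / total,
    )


# Made-up example posts
posts = ["I feel tired and alone today", "Went for a walk with my dog"]
phenotype = build_phenotype(posts)
print(phenotype)
```

Numbers like these would only ever be one input alongside a clinical assessment, and any real system would need consent, privacy safeguards, and validation before use.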

AI also shows promise in detecting suicide risk (Abdelmoteleb et al., 2025; Atmakuru et al., 2024; Bernert et al., 2020; Lejeune et al., 2022), which can enable more timely intervention and potentially prevent suicide attempts or even death. For example, since AI may be able to detect suicide risk more quickly than a clinician, it could enable faster referrals of high-risk patients to clinicians specifically trained to treat individuals at risk for suicide.

How effective is AI in therapy? Summarizing the benefits and limitations

Now that we’ve explored the research, let’s also look at it in summarized form to make it easier to evaluate.

How do you feel about what has been gleaned so far? Although it is still early on, it seems that there are important ways in which AI can be leveraged to support the mental health field.

Conclusion

When integrating AI into psychotherapy, it’s best to consider AI as a supplementary tool: one that doesn’t take over decision-making, but rather helps as part of a suite of tools, resources, and systems.

This is because AI may have difficulty handling complex emotional nuance or crisis situations. The use of AI in therapy also raises ethical concerns related to bias, privacy, and trust. These concerns must be continuously monitored as the field expands, and they require a discerning human to evaluate in full.

Areas for future research

As we’ve seen, there are important limitations and challenges to consider, but the integration of AI in therapy shows promise.

Going forward, AI could be used to analyze baseline data (for example, symptoms and treatment history) to predict the type of therapy that is most likely to be effective for a given client (e.g., cognitive behavioral therapy or dialectical behavior therapy). This could improve treatment outcomes and even reduce dropout.

Other ideas include the study of AI as a co-therapist or coach, allowing therapists to have a sounding board or “second opinion” in each session; the examination of how biases in AI models might affect marginalized populations in therapy; and testing frameworks to ensure ethical, inclusive, and culturally responsive AI integration.